On Sunday, an ABC/Washington Post poll gave us all heart attacks when it showed President Biden trailing former President Trump by 10 percentage points. Responsibly, the Post and ABC took pains to call that result an “outlier.” But more than a year before the 2024 election (before any of Trump’s trials or jury verdicts, before House Republicans do or don’t impeach Biden, before another sure-to-be-controversial Supreme Court term, and who knows what else), pretty much every major media outlet has already weighed in with headline-grabbing polls showing Trump and Biden running even.

All of this has created enormous panic, among Democratic partisans and among everyone else who dreads a second (and forever) Trump Administration. Could it really be true that Americans are more likely to elect Trump after he tried to overturn the election than before?

If you share this panic, you might be suffering from Mad Poll Disease. Symptoms include anxiety, problems sleeping, loss of affect, and feelings of helplessness about the future of democracy, which are only exacerbated by frantic Twitter exchanges about polling methodology and sample bias.

Today, I want to show that, regardless of the methodology, pollster, or publication, horse race polls (more formally known as “trial heats,” which ask respondents whom they intend to vote for) are worse than useless. This is especially true more than a year ahead of the election, but, as I’ll explain, it’s also true in the weeks and months before.

Horse Race Polling Is Punditry in Disguise

Imagine that in September 2016 you were watching a panel with five pundits discussing the presidential race in Florida. The first says they think that Clinton is up by 4 points, the second thinks she is ahead by 3, another two think she’s only ahead by 1 point, and the fifth thinks Trump is ahead by 1 point.

Because I used the words “pundit” and “think,” you have no trouble understanding that those were opinions. But because polling wears a scientific veneer, many people don’t realize that it, too, depends on the opinions of the pollster! To conduct a poll, pollsters have to make their best guess about who will eventually vote, and then “weight” the survey results they get to match the demographic composition of the electorate they expect on Election Day.
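To see how much the turnout guess alone can move a topline, here is a minimal Python sketch of simple demographic weighting. The two groups, their support levels, and both electorate compositions are hypothetical numbers invented for illustration; real pollsters weight on many more variables and often apply likely-voter screens, so treat this as a toy model rather than any pollster’s actual method.

```python
# Toy illustration of demographic weighting (post-stratification).
# All numbers are HYPOTHETICAL, chosen only to show the mechanism.

# One raw survey: within each group, the share backing candidate A and candidate B.
SURVEY = {
    "younger": {"A": 0.60, "B": 0.40},
    "older": {"A": 0.45, "B": 0.55},
}


def weighted_margin(survey: dict, electorate: dict) -> float:
    """Return candidate A's lead in percentage points after weighting each
    group's responses to an assumed electorate (group shares summing to 1)."""
    if abs(sum(electorate.values()) - 1.0) > 1e-9:
        raise ValueError("electorate shares must sum to 1")
    a = sum(share * survey[group]["A"] for group, share in electorate.items())
    b = sum(share * survey[group]["B"] for group, share in electorate.items())
    return round(100 * (a - b), 1)


# Two hypothetical guesses about who will show up on Election Day.
HIGH_YOUTH_TURNOUT = {"younger": 0.40, "older": 0.60}
LOW_YOUTH_TURNOUT = {"younger": 0.25, "older": 0.75}

if __name__ == "__main__":
    print(weighted_margin(SURVEY, HIGH_YOUTH_TURNOUT))  # 2.0  (A leads by 2)
    print(weighted_margin(SURVEY, LOW_YOUTH_TURNOUT))   # -2.5 (B leads by 2.5)
```

The same set of responses produces A +2 under one turnout assumption and B +2.5 under the other: a 4.5-point gap that comes entirely from the pollster’s judgment about the electorate, not from sampling error.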

The pundit panel I described above actually happened, only with pollsters instead of pundits. It was one of the most useful pieces of political data journalism The New York Times has ever published. The Times asked four respected pollsters to independently evaluate the same set of survey data and estimate the margin of victory for Clinton or Trump in Florida. The result: including the Times’s own assessment, the same survey produced estimates ranging from Clinton +4 to Trump +1. That 5-point range had absolutely nothing to do with a statistical margin of error, and everything to do with each pollster’s opinion about who was going to vote. (Forty-nine days after that piece was published, Trump won Florida by just over 1 point.)

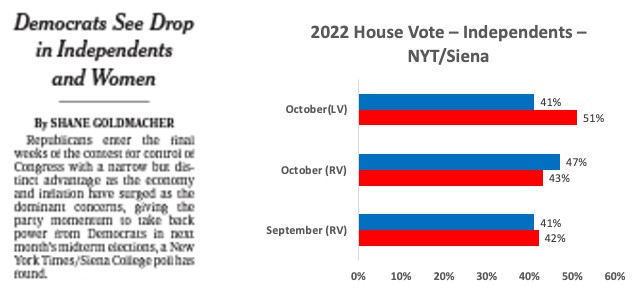

But that lesson didn’t stick in The New York Times’s own coverage. In October 2022, the Times blew up the then-conventional wisdom that House Democrats were competitive when it released a survey showing Republicans with a nearly 4-point lead in the race for the House, an almost 6-point swing from its previous poll just a month earlier.

The problem? Bear with me. In September, the Times made no assumptions about who would vote; it surveyed registered voters and found House Democrats leading by 2 points. Its October survey of registered voters found the race tied. But instead of reporting that apples-to-apples comparison, which showed Democrats losing 2 points among registered voters, the Times reported that among likely voters, Republicans now led by nearly 4 points. The Times’s pollsters basically conjured a dramatic 6-point surge toward Republicans out of thin air by applying a different model to the October data than they had in September.

Its front-page headline also declared that Democrats were losing ground with independents and women. But here’s the thing: independent voters hadn’t changed their minds; The New York Times had changed its mind about which independents would vote. An apples-to-apples comparison of registered voters from that same October survey would have shown not a 9-point net drop for Democrats among independents since September, but the opposite: a 5-point net gain.

In every other context, reporters go out of their way to attribute opinions to sources. But political journalists report their survey results as mathematical facts—which makes it more difficult, especially for general readers, to remember the caveats that should accompany all polling results. Any time you read a sentence like “38 percent of white voters support Biden,” it actually means “our survey found that 38 percent of white voters support Biden.”

Unfortunately, the media is driving us into an epistemological cul-de-sac where what’s seen in surveys is presumptively more “true” than other evidence, such as administrative records, other data sources, and real-world observations. To be sure, polling can offer important insights. But to be useful, its results must always be placed in dialogue with other imperfect sources of signal about the electorate. Our confidence in any particular proposition should depend on the number and credibility of independent sources of evidence corroborating it. By failing to meet this standard, the media has become a reckless super-spreader of Mad Poll Disease.

At the same time that media institutions are leading with dire warnings based on their own polling, they are all but ignoring other data showing remarkable Democratic strength in special elections, which have historically been an important harbinger of partisan enthusiasm. In special election after special election, Democrats have significantly outperformed the partisan lean of the district. And that’s not even counting Wisconsin Supreme Court Justice Janet Protasiewicz’s 11-point statewide victory in what was technically a nonpartisan race, but one that Wisconsin voters well understood as a MAGA versus anti-MAGA contest.

Horse Race Polling Can’t Tell Us Anything We Don’t Already Know

To begin to cure Mad Poll Disease, make this your mantra: Horse race polling can’t tell us anything we don’t already know before Election Day about who will win the Electoral College.

All we know, or can know, is this:

1. A popular vote landslide is very unlikely.

America is a rigidly divided nation in which the last six presidential elections have been decided by an average of 3 points, and, since 1996 (other than 2008), none have been decided by as much as 5 points.

2. The Electoral College is too close to call.

The Electoral College will almost certainly be decided by which candidate wins Georgia or Pennsylvania (or both), plus two of the three other battleground states: Arizona, Michigan, and Wisconsin. (In a few less likely scenarios, Democrats would need to hold onto Nevada as well.) In both 2016 and 2020, the margin of victory in most of these five states was less than 1 point. Meanwhile, FiveThirtyEight found that even the best polling in 2022 differed from the actual results by 1.9 percentage points on average. In other words, in 2016 and 2020, first Trump and then Biden won the states they needed by margins too small for even the “best” polling to detect, judged by how that polling performed in the weeks before the 2022 midterms, when tens of millions of people had already voted.

3. Whether the anti-MAGA vote turns out again in the battleground states will determine the winner.

A voter’s choice between Biden and Trump hasn’t changed much in the last several years and is very unlikely to change in the next one. Whether that voter turns out is another matter: people who do not vote in every election are notoriously poor at forecasting their own behavior, even a month before the election.

Since 2016, Arizona, Georgia, Michigan, Pennsylvania, and Wisconsin have been a continuous ground zero for referendums on MAGA candidates. When the stakes of electing a MAGA candidate are clear to voters in those states, MAGA consistently loses. That’s why, in the 2022 midterms, the Red Wave never happened in those states. When Trump won all five of them in 2016, Republicans held state government trifectas in four, as well as six of their 10 U.S. Senate seats. Since 2016, Trump and other MAGA candidates have lost 23 of the 27 presidential, Senate, and governors’ races in those states, and only Georgia still has a Republican trifecta.

If polls taken weeks or months before the election can’t tell us anything useful about close races (which, again, are the only races that matter in our current system), why on earth would we pay attention to polls taken more than a year out?

Change the Channel!

If you are worried that you will miss something crucial by ignoring the polls, consider the following.

FiveThirtyEight’s Election Day forecast for last year’s governors’ races favored the loser in two of the five states that will decide the Electoral College (and, likely, control of the Senate). Its Election Day forecast for the Senate favored the loser in three of the five battleground Senate races.

I’m not saying that FiveThirtyEight did a poor job; indeed, FiveThirtyEight has been essential in modeling best practices and data transparency, and serves as an important check on unscrupulous claims by outlier pollsters. I’m saying that, when elections are very close, it’s simply not possible for any polls or forecasting to tell us anything more specific than “the race will be close.” They’re just not accurate enough; it’s like trying to look for bacteria using a magnifying glass.

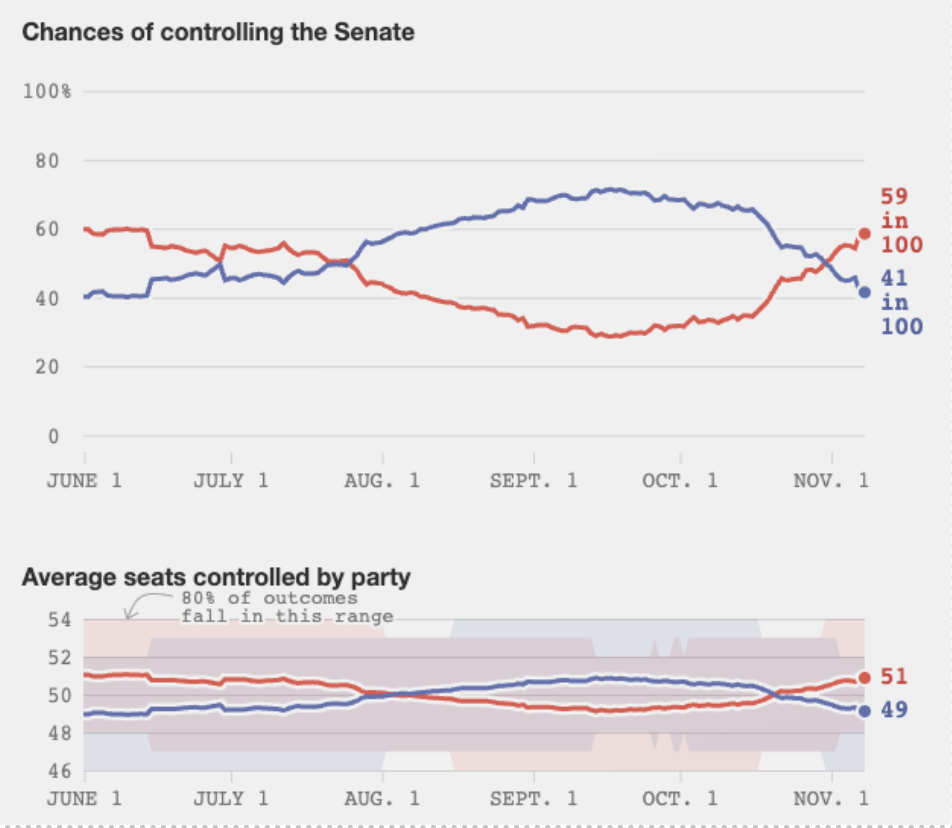

Now let’s look at FiveThirtyEight’s 2022 Senate forecast. Notice that it began five months before the election, nine months closer to the election than we are today. Over those five months, the odds of Republicans winning a Senate majority started at 60 percent, fell to 30 percent, and then rebounded to 59 percent on Election Day. Then voters defied those expectations by expanding Democrats’ Senate majority, an outcome the forecast necessarily rated as even less probable than Democrats simply holding their 50 seats.

Furthermore, the same five battleground Senate races that were close on Election Day were already considered competitive a year earlier, when The Cook Political Report with Amy Walter issued its November 2021 race ratings. Horse race polling could not have told you anything you didn’t already know.

(The Media Can) Never Tell Us the Odds

NYU journalism professor Jay Rosen argues, correctly, that the media should cover the stakes of an election rather than the odds, because the stakes are more important. To this, I would add that the media cannot cover the odds in a way that gives voters meaningful information—so the stakes are all we have left.

If you are old enough to remember what happened in November 2022, you should be profoundly skeptical of what the polling is telling us now about November 2024. Polling failed to anticipate the anti-MAGA dam that held back the Red Wave, partly because polling cannot tell us in advance who will turn out to vote. This matters because, as I’ve explained before in the Monthly, Democrats turned out in higher numbers in 15 states where the MAGA threat seemed more salient—but in the 35 states that lacked high-profile, competitive MAGA candidates, we saw the expected Red Wave.

It’s not unreasonable to imagine that if House races in “safe” blue states like California, New Jersey, and New York had been covered the same way as Senate races in swing states—with a constant emphasis that control of the chamber was at stake—the results in the House could have been quite different, too.

While the mainstream media cannot tell us what to think about this or that issue, it has a powerful influence on what we think about.

Case in point: in 1974, before the rise of the polling-industrial complex, the midterms were about Watergate, and even though Republicans had mostly abandoned Nixon, they still paid a steep electoral price, losing 49 seats. If the 2022 midterms had been covered the same way, the central question would properly have been, “Will voters hold Republicans accountable for their efforts to overturn the election?”

But, after January 6th, most political reporters didn’t even entertain the notion that the midterms could be about Trump/MAGA. Instead—made savvy by academic research about how midterms are always thermostatic elections—they regularly insisted that, according to their polls, voters only cared about rising prices and crime.

Einstein said, “It is the theory which decides what can be observed.” So, even after the January 6th hearings began, the media continued to discount the idea that the midterms could be another referendum on Trump and MAGA, relying on polls that showed that the hearings were not substantially increasing the number of Americans who thought Trump was guilty. They couldn’t “observe” the fact that the hearings were re-energizing infrequent anti-MAGA voters who already believed Trump was guilty, and were convincing them of the importance of keeping his MAGA fellow travelers out of office in their states.

I am not arguing that journalists have a responsibility to help Democrats get elected; I am arguing that journalists’ most important, First-Amendment-justifying responsibility is to give voters the information they need to be democratic citizens. Instead, the fraternity of leading media pollsters (and it is, sadly, pretty much a fraternity) judges itself after an election by how well it anticipated what voters would do, rather than by how well informed voters were or how well it understood what they actually cared about. Thus, even after this latest epic miss, there has been no public soul-searching about how to better understand what motivates voters.

Margin of Error or Margin of Effort?

Enough of the statistical stuff. Stressing over polling makes us think election outcomes are like the weather—something that happens to us. In reality, election outcomes are what we make happen—especially in the battleground states, which are so evenly and predictably divided.

Remember: any election within the margin of error is also within the margin of effort—the work we must always put in to get enough of those who dread a MAGA future to turn out to vote. The only FDA-approved cure for Mad Poll Disease is to pay attention to what matters: the ongoing MAGA threat.